Algorithmic limitations and human biases in an AI image generator

2022 was the year of AI image generators.

Over the past few years, these machine learning systems have been modified and refined, undergoing multiple iterations to find their current popularity with the everyday internet user.

These image generators, of which DALL-E and Midjourney are arguably the most prominent, generate images from a variety of text prompts, allowing people, for example, to create conceptual representations of future, present and past constructs.

But because we exist in a digital landscape riddled with human biases, navigating these image generators requires careful reflection.

Midjourney

Midjourney is a particularly interesting AI tool that has proven popular with artists and designers alike because of the fanciful, sketch-like imagery it creates from sometimes very short text prompts.

But the results obtained with this tool also raise complex questions related to image making and design.

These questions come to the fore when prompts such as “African architecture” are used to produce images.

The term “African architecture” is itself quite contentious, given that Africa is a continent of nations with distinct architectural styles and practices.

There has been much discussion, past and ongoing, about the usefulness of geographical labels such as “sub-Saharan Africa”, and about the harmful framing of the African continent as a single country.

At the same time, the history of European colonialism on the continent led to blocs of African states sharing similar colonial and post-colonial infrastructures.

This sometimes entails grouping selected African countries under a common typology, such as the similarities found in the colonial and tropical modernist structures of independence-era Ghana and Nigeria.

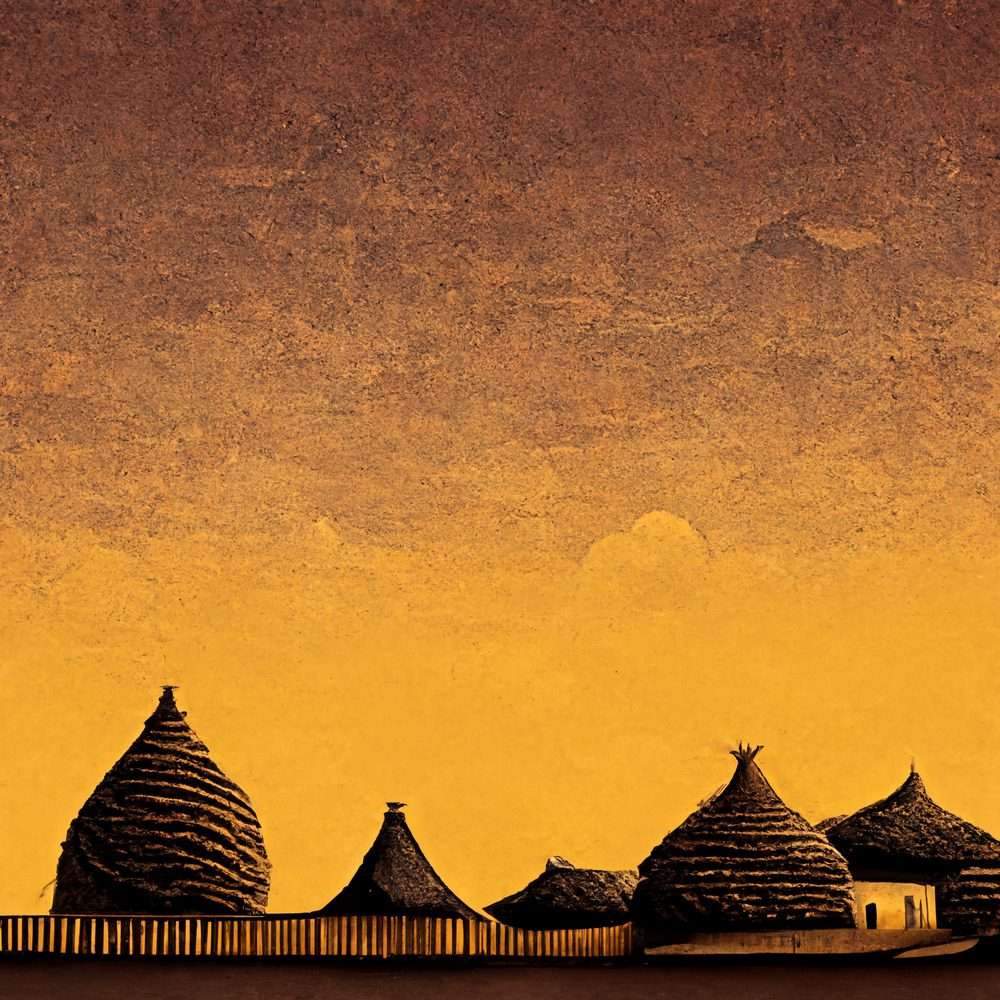

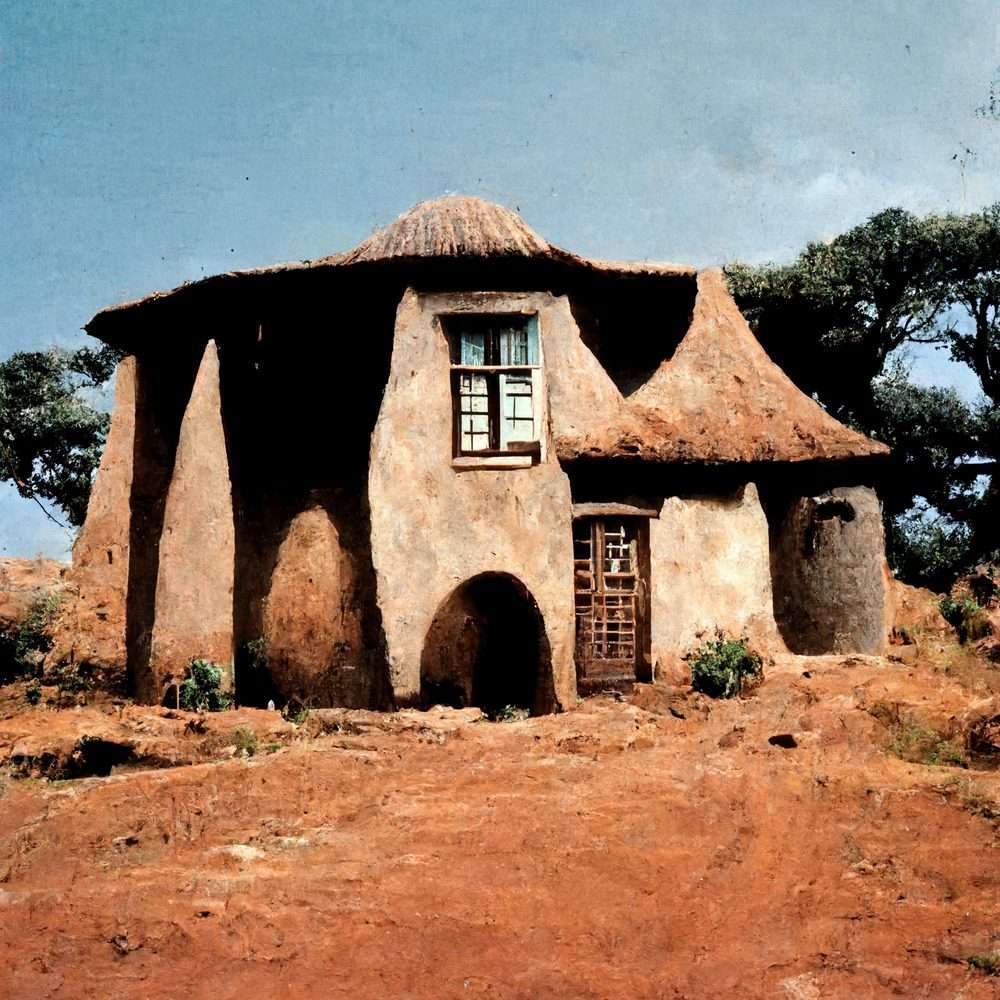

In Midjourney, entering the prompt “African architecture” produced images of hut-like forms, topped by what looked like thatched roofs in a seemingly rustic setting.

The prompt “Vernacular Architecture of Africa” produced picturesque landscapes, with hut-like buildings, acacia trees in the background, and reddish-brown earth in the foreground.

It is true that these forms are common throughout the continent, from the examples of traditional Sukuma architecture found in the Bujora Museum in Mwanza, Tanzania, to the rondavel huts of South Africa.

But despite these general prompts, there is a distinct lack of diversity in the type of images created, which neglect forms such as the flat-roofed earthen buildings found in the Moroccan province of Ouarzazate, or even the very diverse urban architecture of Africa's great cities.

Use of the public dataset

The generation of images of this kind from those specific prompts reflects broader issues with how the African continent is perceived online, from the lack of access to content in many African languages to the persistence of reductive narratives about the African continent across the web.

Nuance is not visually evident in the image generator's outputs for “African architecture”.

For the sake of comparison, the prompt “European architecture” produced what appear to be grand streetscapes that would not be out of place in Brussels or Paris. But again, there is a lack of diversity, as the output eschews more modern buildings and favors architectural forms that would fit the neoclassical mould.

Generative AI algorithms usually work by drawing on large, subject-specific image banks to train their models.

For Midjourney, generic datasets are used to produce the results generated from text prompts. Naturally, biases in the publicly available images and in how they are classified will seep into the art created by models trained on these images.
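As a rough illustration of how that seepage happens, the toy sketch below counts the style tags attached to captions matching a prompt. Everything in it is an assumption made for illustration: the caption list, its 8:1:1 split and the `style_shares` helper are invented, and none of it describes how Midjourney actually works. It only shows that a model which mirrors the captions it was trained on inherits their imbalance.

```python
from collections import Counter

# Hypothetical, hand-made captions standing in for a scraped public dataset.
# The 8:1:1 skew is invented for illustration, not measured.
captions = (
    ["african architecture, thatched hut"] * 8
    + ["african architecture, earthen kasbah"] * 1
    + ["african architecture, city high-rise"] * 1
)


def style_shares(captions, prompt):
    """Return the share of each style tag among captions that match the prompt."""
    styles = Counter(
        caption.split(", ")[1]
        for caption in captions
        if caption.startswith(prompt)
    )
    total = sum(styles.values())
    return {style: count / total for style, count in styles.items()}


shares = style_shares(captions, "african architecture")
print(shares)
# {'thatched hut': 0.8, 'earthen kasbah': 0.1, 'city high-rise': 0.1}
```

In this made-up data, a generator that simply reproduced the proportions of its captions would return a thatched hut eight times out of ten for the generic prompt, however varied the architecture it is meant to represent.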

The images of “African architecture” and “Vernacular architecture in Africa” authored by artificial intelligence are the result of stereotyped captions for photos of African architecture online, not to mention how one-dimensional the visual results for “African architecture” can still be when one enters that text into an online search engine.

Of course, one has the option of entering more specific text prompts rather than generic ones that rely on designations such as “African architecture” or “European architecture”.

Entering “1950s Nairobi architecture”, for example, shows images of skyscraper-lined avenues in the Kenyan capital interspersed with the greenery of Uhuru Park, with blurred shapes reminiscent of the Teleposta Towers and the Times Tower in the background.

African architecture

But the use of these more specific prompts still means that the images created from broader prompts such as “African architecture” suffer from overly generalized perceptions, reinforcing a scenario that repeats preconceived visual ideas of African architecture.

Much has been said about the type of knowledge that prevails in machine learning and the number of algorithms that do not accurately represent the global context in which we live. And as designers, artists, and ordinary hobbyists continue, in these still early days of competent AI image generators, to explore and test creative concepts through software, it is worth considering how much these speculative images may end up reinforcing stereotypes that the world would do well to stay away from.
